Overview

The winners:

| Place | Team |
| --- | --- |
| First Place | aad_freiburg |
| Second Place | narnars0 |
| Third Place (three-way tie) | wlWangl, thanhdng, Malik |

Please follow the link https://competitions.codalab.org/competitions/17767 for more information.

We announced the competition at the NIPS 2017 CiML workshop on December 9, 2017.

Machine learning has achieved great success in online advertising, recommender systems, financial market analysis, computer vision, linguistics, bioinformatics and many other fields, but that success relies crucially on human machine learning experts. In almost all of these applications, human experts are involved in every stage of machine learning, including converting real-world problems into machine learning problems, collecting data, engineering features, selecting or designing the model architecture, tuning model hyper-parameters, evaluating model performance, and deploying the machine learning system online. As the complexity of these tasks is often beyond non-experts, the rapid growth of machine learning applications has created a demand for off-the-shelf machine learning methods that can be used easily and without expert knowledge. We call the resulting research area, which targets the progressive automation of machine learning, AutoML (Automatic Machine Learning). The goal of the PAKDD 2018 data competition (AutoML Challenge 2018) is to solve real-world classification problems without any further human intervention.

The competition has two phases:

The Feedback phase accepts result or code submissions. Participants can practice on five datasets of a similar nature to those of the second phase and may make a limited number of submissions. Because the labeled training data and the unlabeled test set can be downloaded, participants can prepare their code submission offline and submit it later. The LAST submission must be a CODE SUBMISSION, because it will be forwarded to the next phase for final testing.

The AutoML phase is the blind test phase. The last submission of the previous phase is blind-tested on five new datasets. Participants' code will be trained and tested automatically, without human intervention. The final score is determined by the results of this blind testing.

Although the scenario is fairly standard, this challenge introduces the following difficulties:

- Algorithm scalability. We will provide datasets that are 10-100 times larger than in previous challenges we organized.

- Varied feature types. Several feature types will be included (continuous, binary, ordinal, categorical, multi-value categorical, temporal), as will categorical variables with a large number of values following a power law.

- Concept drift. The data distribution changes slowly over time.

- Lifelong setting. All datasets in this competition are split chronologically into 10 batches, so the batches of each dataset are chronologically ordered (note that instances within a batch are not guaranteed to be chronologically ordered). Algorithms will be tested on their ability to adapt to changes in the data distribution by being exposed to successive, chronologically ordered test batches. After testing, the labels will be revealed to the learning machines and incorporated into the training data.
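The lifelong setting can be sketched as a predict-then-reveal loop. The code below is only an illustration, not the challenge's actual ingestion program: the names `RunningRateLearner` and `run_lifelong` are hypothetical, the learner is a deliberately trivial stand-in, and treating the first batch as the initial training data is an assumption made here for simplicity.

```python
from typing import List, Sequence, Tuple

# One batch = (feature rows, binary labels). Hypothetical layout for this sketch.
Batch = Tuple[List[List[float]], List[int]]


class RunningRateLearner:
    """Toy stand-in learner: scores every instance with the positive rate seen so far."""

    def __init__(self) -> None:
        self.positives = 0
        self.total = 0

    def fit(self, X: Sequence[Sequence[float]], y: Sequence[int]) -> None:
        # Incorporate newly revealed labels into the running statistics.
        self.positives += sum(y)
        self.total += len(y)

    def predict(self, X: Sequence[Sequence[float]]) -> List[float]:
        rate = self.positives / self.total if self.total else 0.5
        return [rate for _ in X]


def run_lifelong(learner: RunningRateLearner, batches: Sequence[Batch]) -> List[List[float]]:
    """Predict each later batch before its labels are revealed, then learn from it."""
    first_X, first_y = batches[0]  # assumption: the first batch is the initial training set
    learner.fit(first_X, first_y)
    predictions = []
    for X, y in batches[1:]:
        predictions.append(learner.predict(X))  # labels are still hidden here
        learner.fit(X, y)  # labels revealed and added to the training data
    return predictions


batches = [([[0.0], [0.0]], [1, 1]), ([[0.0]], [0]), ([[0.0]], [1])]
preds = run_lifelong(RunningRateLearner(), batches)
```

A real submission would replace the toy learner with a model that can update itself as batches arrive, which is where the concept-drift difficulty above comes into play.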

Data

Feedback Phase

Public Datasets

Five public datasets are available. Please visit the competition web page on CodaLab for more information and downloads.

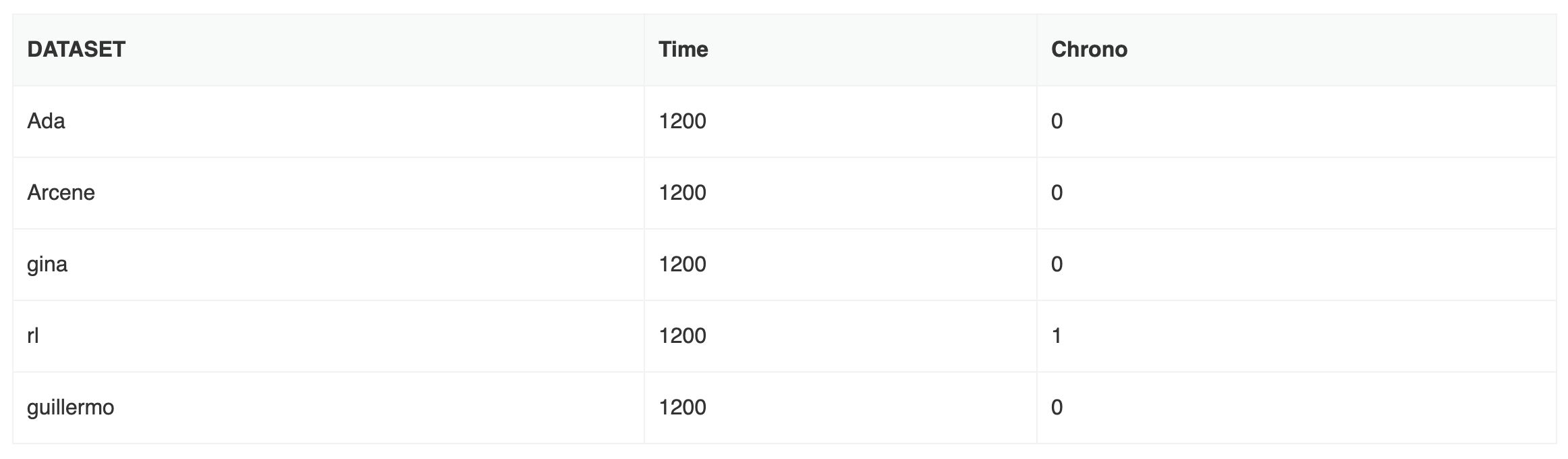


AutoML Phase

Private Dataset Schemas

5 private datasets to be released.

Prizes


* A fraction of the prize amount will be used as a travel grant to attend the conference and workshop.

Rules

Evaluation

- Submitted results and code will be evaluated by their AUC on the test datasets. Participants are ranked on each test dataset, and the final score is the average of those rankings; winners are determined by the resulting overall ranking.

- For the AutoML phase, there is a time budget for each dataset. The learner must produce its test results within the time budget; otherwise, the AUC will be set to 0.5. Each code submission will be executed on a compute worker with the following characteristics: 2 cores, 8 GB memory, 40 GB SSD, Ubuntu OS.
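The scoring described above can be sketched roughly as follows. This is an illustrative reading of the rules, not the organizers' scoring program: the function names and the `auc_table` layout are hypothetical, the pairwise AUC is a simple quadratic-time version, and giving tied teams the average of the ranks they span is an assumption.

```python
from typing import Dict, List, Optional, Sequence

TIMEOUT_AUC = 0.5  # per the rules: AUC assigned when the time budget is exceeded


def auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the chance that a random positive outscores a random negative (ties count half)."""
    pos = [s for l, s in zip(labels, scores) if l == 1]
    neg = [s for l, s in zip(labels, scores) if l == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_ranks(auc_table: Dict[str, List[Optional[float]]]) -> Dict[str, float]:
    """Average each team's per-dataset rank (1 = best); None marks a missed time budget."""
    teams = list(auc_table)
    n_datasets = len(next(iter(auc_table.values())))
    totals = {team: 0.0 for team in teams}
    for d in range(n_datasets):
        scores = {
            t: TIMEOUT_AUC if auc_table[t][d] is None else auc_table[t][d]
            for t in teams
        }
        for t in teams:
            higher = sum(scores[o] > scores[t] for o in teams)
            equal = sum(scores[o] == scores[t] for o in teams)  # includes t itself
            totals[t] += higher + (equal + 1) / 2  # assumption: ties share the mean rank
    return {t: totals[t] / n_datasets for t in teams}


example_auc = auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
ranks = average_ranks({"a": [0.9, 0.8], "b": [0.7, None], "c": [0.7, 0.6]})
```

In this toy table, team `b` misses the time budget on the second dataset, so its AUC there falls back to 0.5 and it is ranked behind both other teams on that dataset.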

Terms & Conditions

- The competition will be run on the CodaLab competition platform.

- The competition is open for all interested researchers, specialists and students. Members of the Contest Organizing Committee cannot participate.

- Participants may submit solutions as teams made up of one or more persons.

- Each team needs to designate a leader responsible for communication with the Organizers.

- One person can only be part of one team.

- The winner of the competition is chosen on the basis of the final evaluation results. In the case of ties in the evaluation scores, submission time will be taken into account.

- Each team is obligated to provide a short report (fact sheet) describing their final solution.

- By enrolling in this competition, you grant the organizers the right to process your submissions for the purposes of evaluation and post-competition research.

Dissemination

- Top-ranked participants will be invited to attend a workshop co-located with PAKDD 2018 to describe their methods and findings. Winners of prizes are expected to attend.

- The challenge is part of the competition program of the PAKDD 2018 conference. Organizers are making arrangements for the possible publication of a book chapter or article written jointly by the organizers and the participants with the best solutions.

Timeline

Competition begins: November 30, 2017

Development Phase ends: March 15, 2018

AutoML Phase ends: March 25, 2018

Report submission ends: March 30, 2018

Reports from selected teams: April 25, 2018

About

Committee

If you have any questions, please send an email to automl2018@gmail.com.

- Isabelle Guyon, Université Paris-Saclay & ChaLearn, Paris, France (Adviser)

- Wei-Wei Tu, the Fourth Paradigm Inc., Beijing, China (Co-Chair)

- Hugo Jair Escalante, INAOE (Mexico), ChaLearn (USA) (Co-Chair)

- Yuqiang Chen, the Fourth Paradigm Inc., Beijing, China

- Guangchuan Shi, the Fourth Paradigm Inc., Beijing, China

- Pengcheng Wang, the Fourth Paradigm Inc., Beijing, China

- Rong Fang, the Fourth Paradigm Inc., Beijing, China

- Beibei Xiao, the Fourth Paradigm Inc., Beijing, China

- Jian Liu, the Fourth Paradigm Inc., Beijing, China

- Hai Wang, the Fourth Paradigm Inc., Beijing, China

About AutoML Challenge

Previous AutoML Challenges can be found here.

AutoML workshops can be found here.

Microsoft research blog post on AutoML Challenge can be found here.

KDD Nuggets post on AutoML Challenge can be found here.

I. Guyon et al. A Brief Review of the ChaLearn AutoML Challenge: Any-time Any-dataset Learning Without Human Intervention. ICML W 2016.

I. Guyon et al. Design of the 2015 ChaLearn AutoML challenge. IJCNN 2015.

Springer Series on Challenges in Machine Learning.

About the Fourth Paradigm Inc. (Main Sponsor)

Founded in early 2015, 4Paradigm (https://www.4paradigm.com) is one of the world’s leading AI technology and service providers for industrial applications. 4Paradigm’s flagship product, the AI Prophet, is an AI development platform that enables enterprises to build their own AI applications with little effort and thereby significantly increase their operational efficiency. Using the AI Prophet, a company can develop a data-driven “AI Core System”, which can be regarded as a second core system alongside the traditional transaction-oriented Core Banking System (IBM Mainframe) often found in banks. Beyond this, 4Paradigm has developed more than 100 AI solutions for settings such as finance, telecommunications and Internet applications. These solutions include, but are not limited to, smart pricing, real-time anti-fraud systems, precision marketing and personalized recommendation. While 4Paradigm can establish a new paradigm for how an organization uses its data, its scope of services does not stop there. 4Paradigm combines state-of-the-art machine learning technologies with practical experience, bringing together a team of experts ranging from scientists to architects. This team has built China’s largest machine learning system and the world’s first commercial deep learning system. With its core team pioneering research on “Transfer Learning,” 4Paradigm leads in this area and, as a result, has drawn great attention from tech giants worldwide.

About ChaLearn & CodaLab (Platform & Data Provider)

ChaLearn (http://chalearn.org) is a non-profit organization with vast experience in organizing academic challenges. ChaLearn is interested in all aspects of challenge organization, including data gathering procedures, evaluation protocols, novel challenge scenarios (e.g., coopetitions), training for challenge organizers, challenge analytics, results dissemination and, ultimately, advancing the state of the art through challenges. ChaLearn is collaborating in the organization of the PAKDD 2018 data competition (AutoML Challenge 2018).

The competition will be run on the CodaLab platform (https://competitions.codalab.org). CodaLab is an open-source, web-based platform that enables researchers, developers, and data scientists to collaborate, with the goal of advancing research fields where machine learning and advanced computation are used. CodaLab offers several features targeting reproducible research. In the context of the AutoML Challenge 2018, CodaLab is the platform on which participants' solutions will be evaluated. CodaLab is administered by CKcollab, LLC. This is made possible by funding from 4Paradigm and a Microsoft Azure for Research grant.